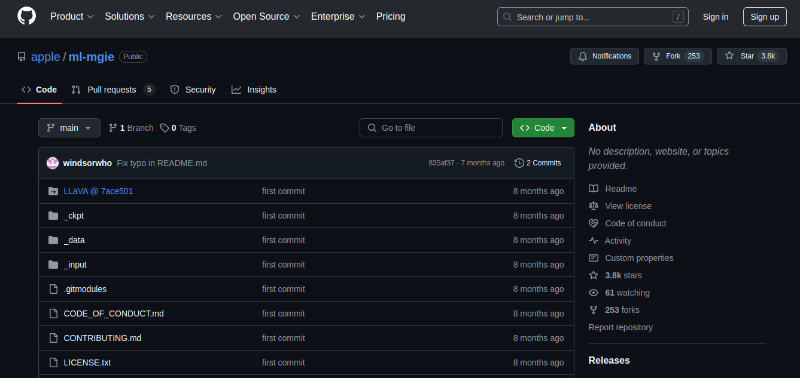

Apple's ML-MGIE is the code repository for MGIE, which stands for MLLM-Guided Image Editing, where MLLM means multimodal large language model. It is a system that uses these models to improve instruction-based image editing. Here's a breakdown of what the project offers:

- Instruction-based Editing: ML-MGIE lets users edit images with natural language instructions instead of complex tools or manual region selection. This simplifies editing for people less familiar with image editing software.
- LLM Guidance: The system uses a multimodal LLM to interpret the user's instruction together with the input image and to guide the editing model toward the intended change. This allows more precise and nuanced control over image manipulation.
- Expressive Instructions: Brief commands are often too terse to pin down the intended edit, so MGIE has the language model derive a more detailed, "expressive" version of the instruction before editing. For instance, you might instruct it to "make the sky more dramatic" or "add a playful puppy to the beach scene".