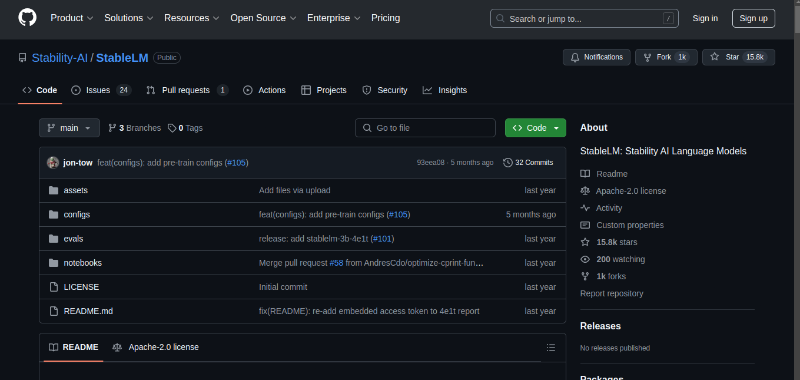

StableLM is a series of language models developed by Stability AI. This document details the StableLM-3B-4E1T model, a 3-billion-parameter decoder-only transformer trained on a filtered mixture of large-scale open-source datasets. It achieves state-of-the-art performance among open-source models at the 3B-parameter scale and is also competitive with many contemporary 7B models.